It was a big day in the lab – January 22, 2024. Kevin Black and I are giving a live demo of his robotic learning system to Hannah Fry, who is making a documentary on superintelligence. Our robot is hardly superintelligent; it would be outwitted by any housecat. But we hooked up speech to text, and it’s showtime.

In a live robot demo, it’s hard to resist the temptation to challenge the machine with a new problem. Our guest takes off her watch – “put the watch on the towel.” The robot casts its arm around aimlessly. I’m good at keeping cool under pressure, but in my head I’m already scrambling to find a way to steer the demo to something more manageable. Kevin is quicker: “it’s good with colors, tell it that it’s the brown leather watch.” The trick works: to my amazement, the robot moves haltingly toward the watch, grasps it by the strap, and drops it gingerly on the towel. “The diffusion model is good with colors” – this model, which we nicknamed SuSIE, combines image synthesis via diffusion with robotic control. A diffusion model pretrained on Internet-scale language-driven image generation “imagines” goals for the robot based on the human command, and the robot uses a learned policy to reach them. Glancing at the screen, I see the imagined subgoal: a small deformed brown teddy bear on a cyan towel, with the gripper hovering triumphantly nearby.
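The two-level structure described above (an image model proposes a subgoal, a learned policy moves toward it) can be sketched as a simple control loop. This is only an illustration under loud assumptions: `SubgoalController`, `imagine_subgoal`, `policy`, and the replanning interval are hypothetical names and values, not SuSIE's actual interfaces, and the "images" are plain lists of floats so the example runs without any robot or model.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

Image = List[float]   # stand-in for a camera frame (hypothetical)
Action = List[float]  # stand-in for an arm command (hypothetical)


@dataclass
class SubgoalController:
    # Assumed role: pretrained diffusion model mapping (frame, command) to a goal image.
    imagine_subgoal: Callable[[Image, str], Image]
    # Assumed role: learned goal-conditioned policy mapping (frame, goal) to an action.
    policy: Callable[[Image, Image], Action]
    # Assumed replanning interval; not taken from the article.
    steps_per_subgoal: int = 20

    def run(self, observe: Callable[[], Image], act: Callable[[Action], None],
            command: str, total_steps: int) -> int:
        """Alternate between imagining a subgoal and acting toward it.

        Returns the number of subgoals that were generated.
        """
        subgoals = 0
        goal: Optional[Image] = None
        for t in range(total_steps):
            obs = observe()
            if t % self.steps_per_subgoal == 0:
                goal = self.imagine_subgoal(obs, command)
                subgoals += 1
            act(self.policy(obs, goal))
        return subgoals


# Toy one-dimensional "world": the state is a single number.
state = [0.0]


def act(action: Action) -> None:
    state[0] += action[0]


controller = SubgoalController(
    imagine_subgoal=lambda obs, command: [obs[0] + 1.0],   # goal is one unit ahead
    policy=lambda obs, goal: [0.1 * (goal[0] - obs[0])],   # step a fraction of the way
)
subgoals = controller.run(lambda: list(state), act,
                          "put the watch on the towel", total_steps=50)
print(subgoals, state[0] > 0.0)
```

The point of the split is that the subgoal generator can be trained on web-scale image-language data, while only the low-level policy needs robot data.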

This robot is using a kind of robotic foundation model, trained on both Internet-scale image-language data and robotic data that we collected in the lab. The image-language data allows the robot to understand visual features, and how language commands should map onto desired outcomes. The robotic data that we collected in the lab allows the robot to figure out how movements of its arm affect the objects in the scene. Putting the two together gives us a robotic system that can understand language and take actions to fulfill user commands. These ingredients – web-scale training for semantic understanding and generalization, combined with robotic data for grounding this understanding in the physical world – represent a small-scale prototype for a general recipe for robotic foundation models. But this simple prototype also raises some important questions: how do we go from these kinds of small-scale laboratory demonstrations to truly capable robotic systems? And what data will these systems be trained on?

Where will we get the data to train our robots?

The robotics community is split on where the data to train general-purpose robotic foundation models will come from, with the most prominent schools of thought broadly categorized as: (1) simulation; (2) YouTube; (3) actual robots. While it’s always easy to argue for compromises and all sources being valuable, I believe that in the end it’s the real robot data (3) that will be the biggest enabling factor for real-world robotic learning systems. However, while there is a great argument for why this perspective is appealing, it has been harder in the past to argue why it is also pragmatic and realizable in the near term.

Proponents of simulation-heavy or video-heavy approaches often start from the assumption that the data requirements for learning from robotic data are simply far too large. We currently lack an accurate estimate of the data required for a robot to match the physical capabilities of a four-year-old, much less an adult. Fortunately, once robotic learning methods are good enough to do something useful with enough robustness and generality to be deployed in real-world settings, the problem of data availability largely disappears. Any robotic system deployed at scale is going to produce so much embodied experience that other sources of data – even those that are at “Internet scale” – will pale in comparison. As an example, if every McDonald’s in the US (about 10,000) puts just one robot in the kitchen working for just two hours per day, this will generate about 7,300,000 hours of experience in a single year.
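The fleet estimate is just a product of three numbers, which makes it easy to check or to redo with different assumptions. A minimal sketch using the figures quoted in the text (10,000 locations, one robot each, two hours per day) and assuming the robots run all 365 days of the year; the function name is made up for illustration. With these inputs the total comes to several million robot-hours per year.

```python
def fleet_hours_per_year(num_robots: int, hours_per_day: float,
                         days_per_year: int = 365) -> float:
    """Total robot-hours of experience a fleet accumulates in one year."""
    return num_robots * hours_per_day * days_per_year


# Figures from the text; 365 operating days per year is an assumption.
hours = fleet_hours_per_year(num_robots=10_000, hours_per_day=2)
print(f"{hours:,.0f} robot-hours per year")  # 7,300,000 robot-hours per year
```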

That means that, if we can build learning-enabled robots that can be deployed at scale in open-world settings, robotic data becomes the uncontested winner for the optimal data source for embodied systems. In fact, it becomes such a good data source that it seems probable that it will filter back into non-embodied systems that are currently trained on data from the web: with tens or hundreds of thousands of robots interacting with the physical world every day, using “hand-made” content from the Internet for training LLMs and VLMs might seem quaint in retrospect. But this argument is circular, and we still need a way to start this flywheel.

The Missing Links: Internet-Scale Pretraining with Cross-Embodiment Finetuning

Kevin’s robot was able to pick up the watch not because it had ever seen a watch in its training data, but because it used a model that was pretrained on Internet-scale data that gave it an understanding of how words relate to images. The same lesson can be drawn from the success of RT-2 and OpenVLA – large vision-language models that are finetuned for robotic control and inherit the semantic reasoning capabilities of multimodal language models. This is an important part of the puzzle – one of the two missing links that will enable us to start the data flywheel in robotics. But Internet-scale training is not enough by itself. It can make a robot “book smart,” but just like you can’t become an expert tennis player by watching Roger Federer, the robot won’t become an expert grasper of brown leather watches no matter how many images or videos it is pretrained on. The key to the effectiveness of Internet-scale pretraining is the ability to “glue” pretrained models to the robot in the right way, so that their capabilities transfer effectively into a grounded embodied model. Early results show that there are a few good ways of doing this. For example, we can reframe manipulation problems in a way that is amenable to interpretation by VLMs (e.g., as in MOKA or PIVOT), and we can construct synthetic examples to provide chain-of-thought reasoning for physical situations, a bit like how LLM researchers craft examples designed specifically to elicit problem solving or coding skills. The key idea behind these approaches, as my colleague Karol Hausman puts it, is to enable robots to “ride the wave” of Internet-scale foundation models.

But riding the wave requires a surfboard that is suitable for robots, and that means robotic data – enough of it to connect the disembodied knowledge in LLMs, VLMs, and diffusion models to the nitty-gritty details of physical grippers, contact forces, and joint angles. The key here will be in using diverse data from many robots. In the same way that it would be crazy to build a computer vision system with images from a single camera type, it will never be scalable to build a robotic foundation model on data from a single robot type. Training our models on data from many different robots will not only make these models usable for all sorts of robotic platforms, but it will help us address a major bootstrapping challenge: if we have strength in numbers, we can collect enough data with enough diversity to fashion our surfboard and ride the wave of Internet-scale pretraining. Even today, combining robotic manipulation datasets as part of the Open X-Embodiment collaboration resulted in a composite dataset with about 1,000,000 demonstration trials, or about 10,000 hours. And this is only the beginning – an academic research prototype for a larger future system. We might also figure out how to effectively incorporate very large autonomously collected datasets via reinforcement learning methods (e.g., as demonstrated by Q-Transformer and PTR), leading to larger and cheaper datasets.
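To put the Open X-Embodiment figures in perspective, a little arithmetic on the numbers quoted in this article helps. The sketch below derives the implied average trial length and asks how long the hypothetical fast-food fleet from earlier (10,000 robots at two hours per day) would need to log the same number of hours. Both results are back-of-the-envelope values computed from the rounded figures in the text, not measured statistics.

```python
# Rounded figures quoted in the article.
TRIALS = 1_000_000        # demonstration trials in the composite dataset
DATASET_HOURS = 10_000    # approximate total duration of the dataset

# Implied average length of one demonstration trial, in seconds.
mean_trial_seconds = DATASET_HOURS * 3600 / TRIALS

# Hypothetical deployed fleet from the earlier example.
fleet_hours_per_day = 10_000 * 2
days_to_match_dataset = DATASET_HOURS / fleet_hours_per_day

print(mean_trial_seconds)      # 36.0
print(days_to_match_dataset)   # 0.5
```

On these numbers, a deployed fleet of that size would match today's pooled research dataset in about half a day, which is why the bootstrap phase matters more than its eventual scale.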

There are still big unanswered questions around cross-embodiment training: How general can such models be? What kind of experience provides positive transfer? Initial evidence is very promising: robotic manipulation policies become better when co-trained on data for robotic navigation, and we can even train policies on data from robots that walk on legs, fly in the air, and manipulate objects with two arms together. But these efforts are still in their infancy – more like GPT-2 than GPT-4 – and we still need plenty of research and realistic, large-scale datasets. However, if we can pool enough data and combine it with Internet-scale pretraining, that might allow us to bootstrap the next phase of robotic learning, where we move toward a robotic data flywheel that allows robots to improve on their own through autonomous deployment. That next phase is where the real power of robotic learning will be realized.

Yelling at Robots and Autonomous Self-Improvement

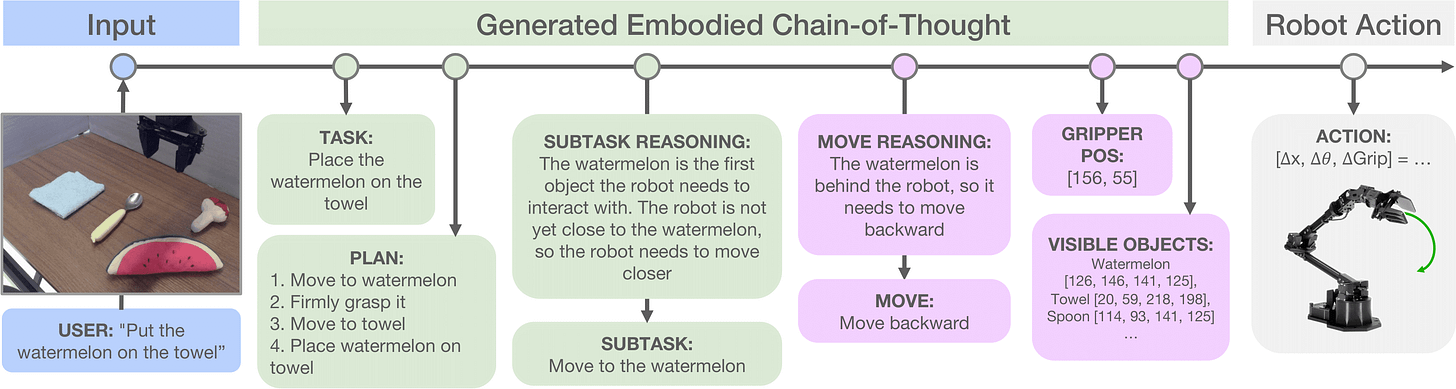

Once robots can get out into the real world, the amount of data and supervision available to them increases dramatically. The 10,000 McDonald’s robots might gather huge amounts of physical experience, but the real benefit will come from our ability to put robots in situations that elicit natural supervision. One place this supervision can come from is interaction. As we all know, current AI systems are quite proficient at processing natural language, and one of the most obvious sources of knowledge will be feedback from people. Already we can build rudimentary systems for natural language feedback, where robots can take human speech, turn it into corrections, and incorporate these corrections into their behavior. The more we incorporate LLMs and VLMs into robotic control, the more opportunities there will be to incorporate such feedback. For example, in our work on embodied chain-of-thought, incorporating natural language feedback was so simple that it was essentially a footnote.

The other major source of feedback, which is harder to use but far more plentiful, is feedback from the physical world. Robots can observe the outcomes of their attempts, and use these outcomes to derive supervision. Reinforcement learning provides the algorithmic framework for such autonomous self-improvement, and it can be used both to specialize to specific downstream tasks that require high robustness, and to improve generalist policies by having the robot “imagine” possible goals and then try to achieve them, like a child making up games to play. In fact, this kind of self-directed reinforcement learning from autonomous experience, performed on top of powerful and general-purpose pretrained models, can offer us a route to robust and even superhuman robotic systems: general-purpose robotic foundation models can give the robot an initial “guess” at how to approach a particular task (e.g., flipping a pancake requires moving the pan in a vertical motion), and RL can allow it to perfect the task. We have preliminary evidence of this: in our recent work on RL for cable routing and PCB assembly, we see that robots trained with autonomous experience can reach a 100% success rate on complex insertion tasks, outperforming models trained on an amount of demonstration data equal to the autonomous data used by the RL algorithm, while achieving cycle times that are 2-3 times faster than those of imitation-learned strategies.

Toward Physical Intelligence

But perhaps the most exciting thing about setting in motion the data flywheel is the potential to enable AI systems that truly understand the physical world. If we can make robots capable enough that they are worth deploying, the data flywheel enabled by fleets of useful deployed robots will lead to datasets of embodied experience that dwarf anything that can be collected from the web. This could not only make robots more capable, but it might become the dominant source of data for training AI systems in general, even outside of robotics applications. After all, while we can debate how much the Internet makes people more or less intelligent, there is no question that intelligent humans came about long before the advent of the web.

But we should also not underestimate the difficulty of the bootstrap problem: we will need more than academic research prototypes to build robotic systems that are useful enough to deploy, and we will need enough embodied experience that practically relevant physical capabilities can be glued to the semantic and visual reasoning skills inherited from Internet-scale pretraining. Just like the development of large language models, this is not just a research question but also an industrial one: can we build systems, data collection efforts, and large-scale models that can combine the building blocks of generalist robot policies into robotic controllers that really work – at scale, across many embodiments, and in a wide range of real-world settings? Tackling the technological questions together with the practical challenges is the mission of Physical Intelligence, and I hope that I will soon be able to share the first steps we are taking in this direction.

Thanks to Karol Hausman and Chelsea Finn for comments and feedback on an early version of this article, thanks to Kevin Black for making an awesome robot demo, and thanks to Hannah Fry for pushing our robot to the limit.