Decisions from Data: How Offline Reinforcement Learning Will Change How We Use ML

Offline RL will change how we make decisions with data. How do offline RL methods work, and what are some open challenges in this field?

A ride sharing company collects a dataset of pricing and discount decisions with corresponding changes in customer and driver behavior, in order to optimize a dynamic pricing strategy. An online vendor records orders and inventory levels to generate an inventory level management policy. An autonomous car company records driving data from human drivers to train an improved end-to-end vision-based driving controller.

All of these applications have two things in common: we would consider each of them to be a classic example of how machine learning can enable smarter decisions with data, and none of them is actually feasible with the machine learning technologies in wide use today unless decision rules and state machines are designed by hand. I will discuss how recent advances in offline reinforcement learning can change that in the next few years. I believe that we stand on the cusp of a revolution in how data can inform automated decision-making. Outside of a few applications in advertising and recommender systems, ML-enabled decision making systems have generally relied on supervised learning methods for prediction, followed by manually designed decision rules that use these predictions to choose how to act. While reinforcement learning algorithms have made tremendous headway in providing a toolkit for automated end-to-end decision making in research, this toolkit has proven difficult to apply in reality because, in its most common incarnation, it is simply too hard to reconcile with the data-driven machine learning pipelines in use today. This may change once reinforcement learning algorithms can effectively use previously collected data, and I will discuss how that might happen.

How machine learning systems make decisions

First, we must draw a distinction between prediction and decision making. Supervised learning systems make predictions. These predictions can then be used to make decisions, but how a prediction turns into a decision is up to the user. If a model forecasts that customer orders will increase 200% in October, a reasonable decision is to increase inventory levels accordingly. However, the model is not telling us that increasing inventory levels will lead to larger profits. Not only does it not account for the distribution shift induced by acting on the model’s own predictions, it also fails to account for the entirety of the decision making process. Real-world decision making systems face a sequential and iterated problem, where each decision influences future events, which in turn influence future decisions. Here are some of the differences between the assumptions made by supervised predictive modeling systems and the properties of real-world sequential decision making problems:

Supervised prediction:

- Predicts manually selected quantities (e.g., number of customer orders)
- Decisions must be made based on predictions manually, using human intuition and hand-crafted rules
- Assumes i.i.d. (independent and identically distributed) data
- Ignores feedback, which changes how inputs map to outputs when the learning system itself interacts with the world (e.g., customers may not react the same way to auto-generated recommendations as they did during data collection)

Sequential decision making:

- Only the objective is manually specified (e.g., maximize profits)
- Requires outputting near-optimal actions that will lead to desired outcomes (e.g., how to alter inventory levels to maximize profits)
- Each observation is part of a sequential process, each action influences future observations (not i.i.d.)
- Feedback is critical, and may be utilized to achieve desired goals through long-term interaction

Reinforcement learning (RL) is concerned most directly with the decision making problem. RL has attained good results on tasks ranging from playing games to enabling robots to grasp objects. RL algorithms directly aim to optimize long-term performance in the face of a dynamic and changing environment that reacts to each decision. However, most reinforcement learning methods are studied in an active learning setting, where an agent directly interacts with its environment, observes the outcomes of its actions, and uses these attempts to learn through trial and error, as shown below.

Instantiating this framework with real-world data collection is difficult, because partially trained agents interacting with real physical systems require careful oversight and supervision (would you want a partially trained RL policy to make real-world inventory purchasing decisions?). For this reason, most work that uses reinforcement learning either relies on meticulously hand-designed simulators, which preclude handling complex real-world situations, especially ones with unpredictable human participants, or requires carefully designed real-world learning setups, as in the case of real-world robotic learning. More fundamentally, the need for active data collection precludes combining RL algorithms with the most successful formula in ML. From computer vision to NLP to speech recognition, time and time again we’ve seen that large datasets, combined with large models, can enable effective generalization in complex real-world settings. However, with active online RL algorithms that must recollect their dataset each time a new model is trained, such a formula becomes impractical. Here are some of the differences between the active RL setup and data-driven machine learning:

Active (online) reinforcement learning:

- Agent collects data each time it is trained
- Agent must collect data using its own (partially trained) policy
- Either uses narrow datasets (e.g., collected in one environment), or manually designed simulators
- Generalization can be poor due to small, narrow datasets, or simulators that differ from reality

Data-driven machine learning:

- Data may be collected once and reused for all models
- Data can be collected with any strategy, including a hand-engineered system, humans, or just randomly
- Large and diverse datasets can be collected from all available sources
- Generalization is quite good, due to large and diverse datasets

Offline reinforcement learning

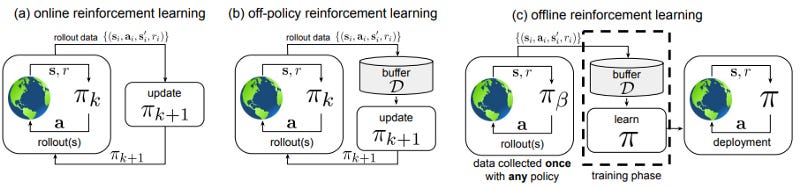

To perform effective end-to-end decision making in the real world, we must combine the formalism of reinforcement learning, which handles feedback and sequential decision making, with data-driven machine learning, which learns from large and diverse datasets, and therefore enables generalization. This necessitates removing the requirement for active data collection and devising RL algorithms that can learn from prior data. Such methods are referred to as batch reinforcement learning algorithms, or offline reinforcement learning (I will use the term “offline reinforcement learning,” since it is more self-explanatory, though the term “batch” is more common in the foundational literature). The diagram below illustrates the differences between classic online reinforcement learning, off-policy reinforcement learning, and offline reinforcement learning:

In online RL, data is collected each time the policy is modified. In off-policy RL, old data is retained, and new data is still collected periodically as the policy changes. In offline RL, the data is collected once, in advance, much like in the supervised learning setting, and is then used to train policies without any additional online data collection. Of course, in practical use, offline RL methods can be combined with modest amounts of online fine-tuning: after an initial offline phase, the policy is deployed to collect additional data and improve online.
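The three regimes differ only in when the training data changes, which a few lines of code can make concrete. This is a minimal sketch: `run_training`, `collect`, and `update` are hypothetical names introduced for illustration, not part of any library, and the returned counters exist only to show how often each regime touches the environment.

```python
def run_training(mode, num_iterations, collect, update, initial_data=()):
    """Schematic training loop for the three data regimes.

    collect(policy) -> list of transitions gathered by running `policy` (hypothetical)
    update(policy, data) -> improved policy trained on `data` (hypothetical)
    Returns (policy, number of data-collection rounds, final dataset size).
    """
    if mode not in ("online", "off_policy", "offline"):
        raise ValueError(f"unknown mode: {mode}")
    policy = None
    data = list(initial_data)
    env_rounds = 0
    for _ in range(num_iterations):
        if mode == "online":
            # Fresh data from the current policy; older data is discarded.
            data = list(collect(policy))
            env_rounds += 1
        elif mode == "off_policy":
            # Older data is retained and new data is appended.
            data.extend(collect(policy))
            env_rounds += 1
        # mode == "offline": the dataset is fixed and the environment is never touched.
        policy = update(policy, data)
    return policy, env_rounds, len(data)
```

In the offline case the loop never calls `collect`, so everything the policy learns has to come from `initial_data`.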

Crucially, when the need to collect additional data with the latest policy is removed completely, reinforcement learning does not require any capability to interact with the world during training. This removes a wide range of cost, practicality, and safety issues: we no longer need to deploy partially trained and potentially unsafe policies, we no longer need to figure out how to conduct multiple trials in the real world, and we no longer need to build complex simulators. The offline data for this learning process could be collected from a baseline manually designed controller, or even by humans demonstrating a range of behaviors. These behaviors do not need to all be good either, in contrast to imitation learning methods. This approach removes one of the most complex and challenging parts of a real-world reinforcement learning system.

However, the full benefit of offline reinforcement learning goes even further. By making it possible to utilize previously collected datasets, offline RL can utilize large and diverse datasets that are only practical to collect once: datasets on the scale of ImageNet or MS-COCO, which capture a wide, longitudinal slice of real-world situations. For example, an autonomous vehicle could be trained on millions of videos depicting real-world driving. An HVAC controller could be trained using logged data from every single building in which that HVAC system was ever deployed. An algorithm that controls traffic lights to optimize city traffic could utilize data from many different intersections in many different cities. And crucially, all of this could be done end-to-end, training models that map rich observations or features directly to decisions that optimize user-specified objective functions.

How do offline reinforcement learning algorithms work?

The fundamental challenge in offline reinforcement learning is distributional shift. The offline training data comes from a fixed distribution, induced by the policy that collected the data (usually referred to as the behavior policy). The new policy that we learn from this data induces a different distribution. Every offline RL algorithm must contend with the resulting distributional shift problem. One widely studied approach in the literature is to correct for the shift with importance sampling, but a large distributional shift leads to high variance in the importance weights. Algorithms based on value functions (e.g., deep Q-learning and actor-critic methods) must contend with distributional shift in the inputs to the Q-function: the Q-function is trained under the state-action distribution induced by the behavior policy, but evaluated, for the purpose of policy improvement, under the distribution induced by the latest policy. Using the Q-function to evaluate or improve a learned policy can result in out-of-distribution actions being passed into the Q-function, leading to unpredictable and likely incorrect predictions. When the policy is optimized so as to maximize its predicted Q-values, this leads to a kind of “adversarial example” problem, where the policy learns to produce actions that “fool” the learned Q-function into thinking they are good.
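The variance problem with importance sampling is easy to see numerically. The sketch below assumes a deliberately simplified setting that is not from this article: two actions, and policies that ignore the state, so the per-step ratios are independent. The function names and the example probabilities are illustrative assumptions. The trajectory weight is the product of per-step ratios between the learned policy and the behavior policy; its mean is 1, but its variance grows exponentially with the horizon whenever the two policies differ.

```python
import random


def trajectory_weight(horizon, pi_target, pi_behavior, rng):
    """Sample one trajectory importance weight.

    Actions are drawn from pi_behavior, and the weight is the product of
    per-step ratios pi_target(a) / pi_behavior(a).
    """
    weight = 1.0
    for _ in range(horizon):
        action = 0 if rng.random() < pi_behavior[0] else 1
        weight *= pi_target[action] / pi_behavior[action]
    return weight


def weight_variance(horizon, pi_target, pi_behavior):
    """Exact variance of the trajectory weight under independent steps.

    Each per-step ratio has mean 1 and second moment sum_a pi_target(a)^2 / pi_behavior(a),
    so the product over `horizon` steps has variance (second moment)^horizon - 1.
    """
    second_moment = sum(t * t / b for t, b in zip(pi_target, pi_behavior))
    return second_moment ** horizon - 1.0


if __name__ == "__main__":
    behavior, target = (0.5, 0.5), (0.9, 0.1)
    for horizon in (1, 5, 10):
        print(horizon, weight_variance(horizon, target, behavior))
    print(trajectory_weight(10, target, behavior, random.Random(0)))
```

With these example policies the variance is 0.64 for a single step and well over 100 for ten steps, which is why methods based on trajectory importance weights struggle when the learned policy moves far from the behavior policy.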

Most successful offline RL methods address this problem with some type of constrained or conservative update. Such an update either avoids excessive distribution shift by limiting how much the learned policy can deviate from the behavior policy, or explicitly regularizes learned value functions or Q-functions so that the Q-values for unlikely actions are kept low, which in turn also limits the distribution shift by disincentivizing the policy from taking these unlikely, out-of-distribution actions. The intuition is that we should only allow the policy to take those actions for which the data supports viable predictions.

Of course, at this point, we might ask — why should we expect offline RL to actually improve over the behavior policy at all? The key to this is the sequential nature of the decision making problem. While at any one time step, the actions of the learned policy should remain close to the distribution of behaviors we’ve seen before, across time steps, we can combine bits and pieces of different behaviors we’ve seen in the data. Imagine learning to play a new card game. Even if you play your cards at random, on some trials some of your actions will — perhaps by accident — lead to favorable outcomes. By looking back on all of your experiences and combining the best moves into a single policy, you can arrive at a policy that is substantially better than any of your previous plays, despite being composed entirely of actions that you’ve made before.
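This "stitching" effect can be reproduced on a toy problem. The sketch below is an illustrative assumption rather than any published algorithm: a hand-written dataset with two logged trajectories that cross at a shared state, and a tabular Q-iteration that only ever backs up through actions present in the data. All state names, action names, and function names are hypothetical.

```python
GAMMA = 0.9

# Logged transitions: (state, action, reward, next_state, done).
# Trajectory 1 starts at "start" and ends badly; trajectory 2 starts
# elsewhere and reaches the goal. Both pass through "mid".
DATASET = [
    ("start", "right", 0.0, "mid", False),
    ("mid", "down", 0.0, "dead_end", True),
    ("other", "left", 0.0, "mid", False),
    ("mid", "up", 1.0, "goal", True),
]


def offline_q_iteration(data, gamma=GAMMA, sweeps=20):
    """Tabular Q-iteration over a fixed dataset, with no environment access."""
    seen_actions = {}
    for s, a, _, _, _ in data:
        seen_actions.setdefault(s, set()).add(a)
    q = {(s, a): 0.0 for s, a, _, _, _ in data}
    for _ in range(sweeps):
        for s, a, r, s_next, done in data:
            next_actions = seen_actions.get(s_next, ())
            if done or not next_actions:
                q[(s, a)] = r
            else:
                # Back up only through actions that appear in the data at s_next.
                q[(s, a)] = r + gamma * max(q[(s_next, b)] for b in next_actions)
    return q, seen_actions


def greedy_policy(q, seen_actions):
    """Pick, in each state, the best action among those seen in the data."""
    return {s: max(sorted(acts), key=lambda a: q[(s, a)])
            for s, acts in seen_actions.items()}


if __name__ == "__main__":
    q_values, actions = offline_q_iteration(DATASET)
    print(greedy_policy(q_values, actions))
```

No logged trajectory that begins at "start" ever reaches the goal, yet the greedy policy goes "right" and then "up", combining the first step of one trajectory with the last step of the other. Every action it takes appears in the data.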

Building on these ideas, recent advances in offline reinforcement learning have led to substantial improvements in the capabilities of offline RL algorithms. A complete technical discussion of these methods is outside the scope of this article, and I would refer the reader to our recent tutorial paper for more details. However, I will briefly summarize several recent advances that I think are particularly exciting:

Policy constraints: A simple approach to control distributional shift is to limit how much the learned policy can deviate from the behavior policy. This is especially natural for actor-critic algorithms, where policy constraints can be formalized as using the following type of policy update:
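In notation that is common in the offline RL literature, a constrained update of this type can be written as below. The symbols are assumptions on my part rather than taken from the text: $\mathcal{D}$ is the offline dataset, $\pi_\beta$ the behavior policy, $Q^{\pi_k}$ the current Q-function estimate, and $\epsilon$ a threshold on the divergence.

$$
\pi_{k+1} = \arg\max_{\pi} \; \mathbb{E}_{s \sim \mathcal{D}} \left[ \mathbb{E}_{a \sim \pi(a \mid s)} \left[ Q^{\pi_k}(s, a) \right] \right] \quad \text{s.t.} \quad D\left(\pi, \pi_\beta\right) \le \epsilon
$$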

The constraint, expressed in terms of some divergence (“D”), limits how far the learned policy deviates from the behavior policy. Examples include KL-divergence constraints and support constraints. This class of methods is summarized in detail in our tutorial. Note that such methods require estimating the behavior policy by using another neural network, which can be a substantial source of error.

Implicit constraints: The AWR and AWAC algorithms instead perform offline RL by using an implicit constraint. Instead of explicitly learning the behavior policy, these methods solve for the optimal policy via a weighted maximum likelihood update of the following form:
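A weighted maximum likelihood update of this kind is usually written in roughly the following form. Apart from $A(s,a)$, the notation is an assumption on my part: $\pi_\beta$ is the behavior policy and $\lambda > 0$ is a temperature that controls how strongly high-advantage actions are favored.

$$
\pi_{k+1} = \arg\max_{\pi} \; \mathbb{E}_{s, a \sim \pi_\beta} \left[ \log \pi(a \mid s) \, \exp\left( \frac{1}{\lambda} A(s, a) \right) \right]
$$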

Here, A(s,a) is an estimate of the advantage, which is computed in different ways for different algorithms (AWR uses Monte Carlo estimates, while AWAC uses an off-policy Q-function). Using this type of update to enforce constraints has been explored in a number of prior works (see, e.g., REPS), but has only recently been applied to offline RL. Computing the expectation under the behavior policy only requires samples from the behavior policy, which we can obtain directly from the dataset, without actually needing to estimate what the behavior policy is. This makes AWR and AWAC substantially simpler, and enables good performance in practice.
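In the tabular case, a weighted maximum likelihood problem of this kind has a closed-form solution, which makes the implicit constraint easy to see. The sketch below is not the AWR or AWAC implementation; it assumes discrete states and actions, takes advantage estimates as given, and uses hypothetical names throughout. For a categorical policy, maximizing the exponentially weighted log-likelihood of the logged actions gives, in each state, probabilities proportional to the summed weights of each action.

```python
import math
from collections import defaultdict


def advantage_weighted_policy(samples, advantage, temperature=1.0):
    """Closed-form weighted maximum likelihood for a tabular policy.

    samples: list of (state, action) pairs taken directly from the dataset.
    advantage: dict mapping (state, action) to an advantage estimate
        (assumed to be computed elsewhere).
    Returns a dict: state -> {action: probability}.
    """
    weighted_counts = defaultdict(lambda: defaultdict(float))
    for state, action in samples:
        weighted_counts[state][action] += math.exp(advantage[(state, action)] / temperature)
    policy = {}
    for state, counts in weighted_counts.items():
        total = sum(counts.values())
        policy[state] = {action: weight / total for action, weight in counts.items()}
    return policy


if __name__ == "__main__":
    logged = [("s", "left")] * 3 + [("s", "right")]
    adv = {("s", "left"): 0.0, ("s", "right"): 1.0}
    print(advantage_weighted_policy(logged, adv))
```

In the usage example, "right" appears in a quarter of the logged samples but has the higher advantage, so the resulting policy gives it more than a quarter of the probability. Actions that never appear in the data receive no probability at all, and the behavior policy is never estimated explicitly.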

Conservative Q-functions: A very different approach to offline RL, which we explore in our recent conservative Q-learning (CQL) paper, is to not constrain the policy at all, but instead regularize the Q-function to assign lower values to out-of-distribution actions. This prevents the policy from taking these actions, and results in a much simpler algorithm that in practice attains state-of-the-art performance across a wide range of offline RL benchmark problems. This approach also leads to appealing theoretical guarantees, allowing us to show that conservative Q-functions are guaranteed to lower bound the true Q-function with an appropriate choice of regularizer, providing a degree of confidence in the output of the method.
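To give a flavor of what such a regularizer can look like, here is a minimal sketch for discrete actions. It follows the log-sum-exp form of the penalty as I understand it from the CQL paper, so treat the exact form as an assumption; the function names are hypothetical. The penalty pushes down a soft maximum of the Q-values over all actions and pushes up the Q-value of the action actually observed in the dataset. In a full algorithm this term would be scaled by a coefficient and added to a standard Bellman error, which is omitted here.

```python
import math


def logsumexp(values):
    """Numerically stable log(sum(exp(v) for v in values))."""
    peak = max(values)
    return peak + math.log(sum(math.exp(v - peak) for v in values))


def conservative_penalty(q_values, data_actions):
    """Average of logsumexp_a Q(s, a) - Q(s, a_data) over a batch of states.

    q_values: list of per-state lists of Q-values, one entry per action.
    data_actions: index of the action taken in the dataset for each state.
    The result is never negative and grows when actions that are absent
    from the data are assigned large Q-values.
    """
    terms = [logsumexp(q_s) - q_s[a] for q_s, a in zip(q_values, data_actions)]
    return sum(terms) / len(terms)


if __name__ == "__main__":
    # Action 0 is the dataset action; action 1 was never observed.
    print(conservative_penalty([[1.0, 5.0]], [0]))   # unseen action looks great
    print(conservative_penalty([[1.0, -3.0]], [0]))  # unseen action looks poor
```

Minimizing this term alongside the usual Q-learning objective lowers the values of actions the data does not support, which is what keeps a policy that maximizes the learned Q-function from exploiting them.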

Despite these advances, I firmly believe that the most effective and elegant offline RL algorithms have yet to be invented, which is why I consider this research area to be so promising both for its practical applications today and for its potential as a topic of research in the future.

What about artificial intelligence?

Aside from its practical value, much of the appeal of reinforcement learning also stems from the widely held belief that reinforcement learning algorithms hold at least part of the answer to the development of intelligent machines — AI systems that emulate or reproduce some or all of the capabilities of the human mind. While a complete solution to this puzzle may be far in the future, I would like to briefly address the relevance of offline RL to this (perhaps distant) vision.

In its classical definition, the active learning framework of RL reflects a very reasonable model of an adaptive natural learning system: an animal observes a stimulus, adjusts its model, and improves its response to that stimulus to attain larger rewards in the future. Indeed, reinforcement learning originated in the study of natural intelligence, and only made its way into artificial intelligence later. It may therefore seem like a step in the wrong direction to remove the “active” part of this learning framework from consideration.

However, I would put forward an alternative argument: in the first few years of your life, your brain processed a broad array of sights, sounds, smells, and motor commands that rivals the size and diversity of the largest datasets used in machine learning. While learning online from streaming data is definitely one facet of the AI problem, processing large and diverse experiences seems to be an equally critical facet. Current supervised learning methods operate far more effectively in “batch” mode, making multiple passes over a large dataset, than they do in “online” mode with streaming data. Cracking the puzzle of online continual learning may one day change that, but until then, we can make a great deal of progress with such batch-mode methods. It then stands to reason that a similar logic should be applied to RL: while understanding continual online learning is important, understanding large-scale learning and generalization is equally important. These facets of the problem will likely be far more practical to tackle in the offline setting, and can then be extended into the online and continual setting once our understanding of online and continual algorithms catches up to our understanding of large-scale learning and generalization. Utilizing large amounts of data effectively for decision making will need to be a part of any generalizable AI solution, and right now, offline RL offers us the most direct path to study how to do that.

Concluding remarks

Offline reinforcement learning algorithms hold the promise of turning data into powerful decision-making strategies, enabling end-to-end learning of policies directly from large and diverse datasets and bringing large datasets and large models to bear on real-world decision-making and control problems. However, the full promise of offline RL has not yet been realized, and major technical hurdles remain. Fundamentally, offline RL algorithms must be able to reason about counterfactuals: what will happen if we take a different action? Will the outcome be better, or worse? Such questions are known to be exceptionally difficult for statistical machine learning systems. Recent innovations in offline RL based around distributional constraints and conservative targets can provide a partial solution, but at its core this problem touches on deep questions in the study of causality, distributional robustness, and invariance, and connects at a fundamental level with some of the most challenging problems facing modern machine learning. While this will present major challenges, it also makes this topic particularly exciting to study.

For readers interested in learning more about this topic, I would recommend a tutorial article that I’ve co-authored with colleagues on this subject, as well as the “Datasets for Data-Driven Deep Reinforcement Learning” benchmark suite, which includes code and implementations for many of the latest algorithms. Aviral Kumar and I will also be giving a tutorial on offline reinforcement learning at NeurIPS 2020. Hope to see you there!

I want to acknowledge helpful feedback from Chelsea Finn and Aviral Kumar on an earlier draft of this article.